Microservice performance that saves you money

NOTICE: This blog post is superseded by a post with more recent performance data. Use the following link to access the blog post that uses the newer performance data. How does Open Liberty’s performance compare to other cloud-native Java runtimes

For many years, the most important metric for application servers was throughput: how many transactions or requests can we squeeze out of this application? The adoption of finer-grained architecture styles, such as microservices and functions, has broadened the set of performance characteristics we should care about: memory footprint now matters alongside throughput.

Where you once deployed a small number of runtime instances for your server and application, you may now be deploying tens or hundreds of microservices or functions. Each of those instances comes with its own server. Even if it's embedded in a runnable jar or pulled in as a container image, there's a server in there somewhere. While cold startup time is critically important for cloud functions (and Open Liberty's time-to-first-response is approximately 1 second, so it's no slouch), memory footprint and throughput are far more important for microservices architectures, in which each running instance is likely to serve thousands of requests before it's replaced.

Given the expected usage profile of tens to hundreds of services with thousands of requests, reduced memory footprint and higher throughput directly influence cost, in terms of both infrastructure and license costs (if you pay for service and support):

- Memory consumption: This has a direct impact on the amount of infrastructure (e.g. cloud) you need to provision.

- Throughput: The more work an instance can handle, the less infrastructure you require.
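To make these two cost drivers concrete, here is a minimal sketch of how throughput and memory footprint combine into a fleet size. The function name `estimate_fleet` and all the numbers are hypothetical, chosen only for illustration. They are not measurements from this post.

```python
import math


def estimate_fleet(peak_rps, rps_per_instance, mem_mb_per_instance):
    """Return (instances, total_memory_mb) needed to serve peak_rps.

    Assumes load is spread evenly across identical instances, which is
    a simplification of real deployments.
    """
    if peak_rps <= 0 or rps_per_instance <= 0 or mem_mb_per_instance <= 0:
        raise ValueError("all inputs must be positive")
    # Higher per-instance throughput means fewer instances.
    instances = math.ceil(peak_rps / rps_per_instance)
    # Lower per-instance footprint means less memory to provision.
    total_memory_mb = instances * mem_mb_per_instance
    return instances, total_memory_mb


if __name__ == "__main__":
    # Hypothetical runtimes: A is twice as fast and half the size of B.
    for name, rps, mem in [("runtime A", 1000, 200), ("runtime B", 500, 400)]:
        instances, total = estimate_fleet(10_000, rps, mem)
        print(f"{name}: {instances} instances, {total} MB in total")
```

In this made-up case, doubling throughput and halving footprint cuts the memory you need to provision to a quarter, which is why the two metrics are worth looking at together.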

The Liberty and OpenJ9 teams have worked extremely hard over the years to make their combined performance world-leading for microservices. Let’s take a look at the latest measurements for these two metrics and compare them against other full Java runtimes.

The results in the following section were all measured on a Linux X86 setup – SLES 12.3, Intel® Xeon® Platinum 8180 CPU @ 2.50GHz, 4 physical cores, 64GB RAM. The JDK versions (and command line settings) were whatever was distributed with the default container images used for each runtime.

Memory - less memory equals cheaper infrastructure costs

Cloud services make the cost and memory footprint correlation abundantly clear. For example, the Azure Kubernetes Service pricing effectively doubles as you double the memory requirements of the nodes. There’s also a correlation between memory and CPUs in the pricing, so this could have a knock-on effect on your license costs. Let’s see how Open Liberty and OpenJ9 can save you memory and money.

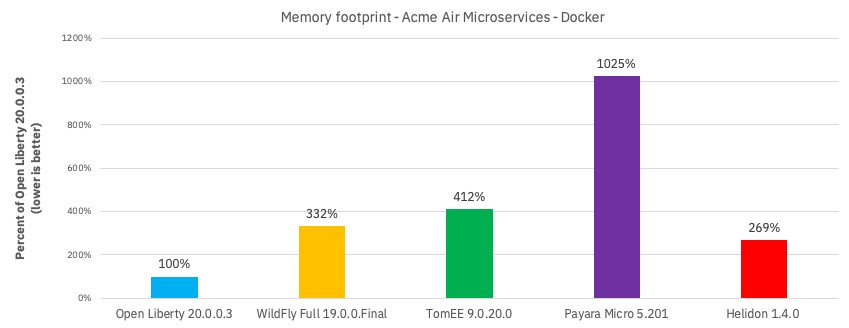

The figure below shows memory footprint comparisons (after startup) for servers running the microservices benchmark Acme Air Microservices. We can see in this scenario that Open Liberty uses 2.5x-10x less memory than the other runtimes we tested:

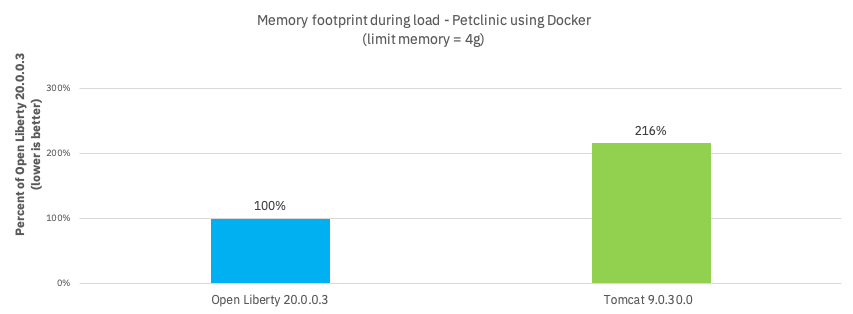

If you’ve chosen Spring Boot for your application (yes, you can use Spring Boot on Open Liberty), then our measurements show an approximately 2x memory footprint benefit from running on Open Liberty rather than Tomcat. For example, the figure below shows the relative memory usage when running the Spring Boot Petclinic application under load with a 4 GB heap:

Throughput - higher throughput equals cheaper infrastructure and license costs

The association between throughput and costs is simple: being able to put more work through a runtime means you can deploy smaller or fewer instances to satisfy demand. You’ll pay less on infrastructure and less on license costs, which are typically based on virtual CPU usage.

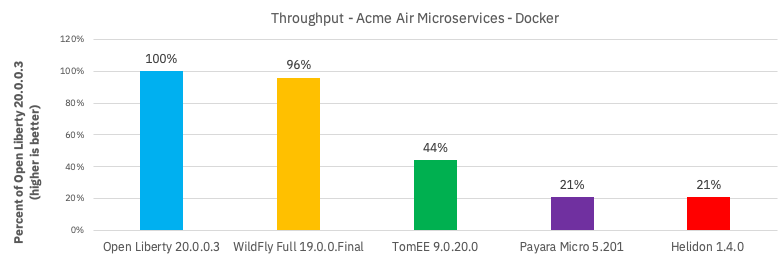

Open Liberty also has significant throughput benefits when compared to other runtimes. The figure below shows throughput measurements for the Acme Air Microservices benchmark. We can see Open Liberty performs better than WildFly and significantly better than the other three runtimes:

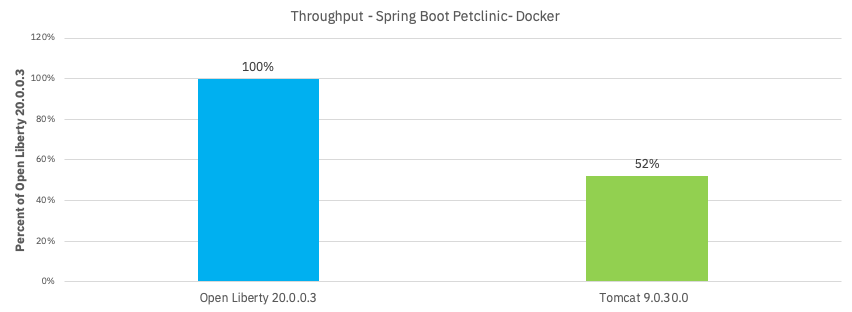

When we compare Spring Boot on Open Liberty throughput with Spring Boot on Tomcat, we can see from the figure below that there is an almost 2x throughput benefit with Open Liberty. This is a similar benefit to that shown in the previous TomEE measurement, which suggests that Open Liberty inherently has a ~2x higher throughput than Tomcat-based runtimes:

Bringing it together

In the previous sections we spoke about the importance of memory and throughput metrics for saving you money on your microservices deployments. We saw individual measurements for each metric, but to get a picture of the overall benefit it’s important to combine the two. To do this, we can simply multiply the two benefits, the results of which are shown in the table below. Of course, your results may vary and we’d recommend trying it out for yourself, but in our measurements, it’s not unrealistic to be able to run your workloads with, at most, a third of the instances you’d need for other full Java runtimes:

| Runtime | Open Liberty memory benefit | Open Liberty throughput benefit | Open Liberty combined benefit |
|---|---|---|---|
| WildFly | 3.3x | 1.0x | 3.5x |
| TomEE | 4.1x | 2.3x | 9.4x |
| Payara | 10.3x | 4.8x | 48.8x |
| Helidon | 2.7x | 4.8x | 12.8x |
| Spring Boot (Tomcat) | 2.2x | 1.9x | 4.2x |
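The multiplication behind the combined column can be sketched as follows, using the rounded memory and throughput figures from the table above. The names `BENEFITS`, `combined_benefit`, and `combined_table` are illustrative. Note that multiplying the rounded figures gives slightly different values for WildFly, Payara, and Helidon than the published combined column. We assume, without confirmation from the measurements themselves, that the published figures were computed from unrounded data.

```python
# Rounded (memory benefit, throughput benefit) pairs copied from the table.
BENEFITS = {
    "WildFly": (3.3, 1.0),
    "TomEE": (4.1, 2.3),
    "Payara": (10.3, 4.8),
    "Helidon": (2.7, 4.8),
    "Spring Boot (Tomcat)": (2.2, 1.9),
}


def combined_benefit(memory_benefit, throughput_benefit):
    """Combine the two ratios by multiplying them."""
    return memory_benefit * throughput_benefit


def combined_table(benefits):
    """Return the combined benefit per runtime, rounded to one decimal."""
    return {
        runtime: round(combined_benefit(memory, throughput), 1)
        for runtime, (memory, throughput) in benefits.items()
    }


if __name__ == "__main__":
    for runtime, value in combined_table(BENEFITS).items():
        print(f"{runtime}: {value}x")
```

The multiplication works because the two effects are independent in this simple model: higher throughput reduces the number of instances you need, and lower footprint reduces the memory each of those instances consumes.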

One final note: although this post has focused on microservices, memory and throughput are also important cost factors for monoliths. We’ve run equivalent benchmarks for monolithic applications and found similar, and in some cases even better, results. So even if you’re happy deploying monoliths, Open Liberty will still save you infrastructure and license costs on those workloads.