Calling LLM tools with LangChain4j in MicroProfile and Jakarta EE applications

Modern Large Language Models (LLMs) can do more than generate text from their pre-trained knowledge: they can also request the execution of functions through a capability called "tool calling." This feature allows LLMs to interact with external systems, access real-time data, and trigger actions that go beyond what their training data covers. For Java developers working with MicroProfile and Jakarta EE, implementing this capability has become straightforward with the LangChain4j library.

This post demonstrates how to implement tool calling in Java applications by using LangChain4j, with a practical example that integrates Stack Overflow search capabilities into a MicroProfile and Jakarta EE application. You’ll learn how to define tools as Java methods, connect them to LLM providers like GitHub, Ollama, or Mistral, and build a complete working application.

Understanding LLM tool calling

Tool calling enables LLMs to identify when to call external functions and with what parameters. When an LLM determines that a user query requires information or actions beyond its training data, it generates a structured function call with appropriate arguments rather than attempting to hallucinate an answer.

For example, when asked about current Stack Overflow issues with a specific library, instead of providing potentially outdated information from its training data, the LLM can recognize the need for current information, call an appropriate search function with the correct query parameters, and process the returned data to formulate a response.

Tool-enabled models like Llama 3.1, GPT-4o, Claude 3 Opus, and Mistral Large support this capability through JSON-based function-calling interfaces. In this tutorial, we’ll use models available through the GitHub, Ollama, and Mistral AI platforms.

LangChain4j: A Java framework for LLM tool integration

Implementing tool calling directly with LLM APIs requires handling complex JSON schemas, managing context windows, and dealing with provider-specific implementations. LangChain4j solves these challenges by providing the following capabilities:

- A unified Java interface for multiple LLM providers
- Simple annotations for converting Java methods into LLM tools
- Automatic handling of parameter serialization and response parsing
- Memory management for maintaining conversation context
- Error handling for hallucinated tool calls

The LangChain4j library lets you focus on your business logic rather than LLM integration details. With simple annotations like @Tool and @P, you can expose any Java method to an LLM, making it particularly valuable for MicroProfile and Jakarta EE applications where you might want to integrate AI capabilities with existing enterprise services.
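To make the role of these annotations more concrete, here is a minimal sketch of how annotation-driven tool discovery can work in principle. It does not use LangChain4j and is not its implementation: `SketchTool`, `SketchParam`, `SearchTools`, and `ToolDescriber` are hypothetical names defined only for this illustration. The sketch reads the annotations through reflection and builds the kind of description that a framework could send to a model.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.lang.reflect.Parameter;
import java.util.ArrayList;
import java.util.List;

class ToolDescriber {
    // Builds one description line for each method that carries @SketchTool.
    static List<String> describe(Class<?> toolClass) {
        List<String> descriptions = new ArrayList<>();
        for (Method method : toolClass.getDeclaredMethods()) {
            SketchTool tool = method.getAnnotation(SketchTool.class);
            if (tool == null) {
                continue; // method is not exposed as a tool
            }
            StringBuilder line = new StringBuilder(method.getName())
                    .append(": ").append(tool.value());
            for (Parameter parameter : method.getParameters()) {
                SketchParam param = parameter.getAnnotation(SketchParam.class);
                if (param != null) {
                    line.append(" | ")
                        .append(parameter.getType().getSimpleName())
                        .append(" - ").append(param.value());
                }
            }
            descriptions.add(line.toString());
        }
        return descriptions;
    }

    public static void main(String[] args) {
        describe(SearchTools.class).forEach(System.out::println);
    }
}

// Hypothetical stand-ins for @Tool and @P, defined here only so that the
// sketch compiles without LangChain4j on the classpath.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface SketchTool {
    String value();
}

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.PARAMETER)
@interface SketchParam {
    String value();
}

// Hypothetical tool class with a canned result instead of a real search.
class SearchTools {
    @SketchTool("Search for questions")
    public String searchQuestions(@SketchParam("Text to search for") String query) {
        return "results for " + query;
    }
}
```

The point of the sketch is that the description strings, not the method bodies, are what the model sees, which is why clear wording in the annotations matters.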

Integrating LangChain4j with MicroProfile and Jakarta EE

LangChain4j integrates seamlessly with MicroProfile and Jakarta EE applications through standard CDI mechanisms. The key components you implement include:

- Tool definitions - Java methods annotated with @Tool that the LLM can invoke
- AI service interfaces - Interfaces that define how your application communicates with the LLM
- Model configuration - Setup code that connects to your chosen LLM provider
- Jakarta WebSocket endpoints - For real-time communication between users and the AI

The following sample application demonstrates all these components in a working Stack Overflow search assistant. The complete code is available in the sample-langchain4j/tools repository, which we’ll examine in detail.

For more examples beyond what is covered here, you can also explore the official langchain4j-examples repository.

Try out the AI tools sample application

The sample application demonstrates LLM tool calling in a MicroProfile and Jakarta EE application. It implements a chat interface in which an AI assistant can search Stack Overflow when asked technical questions, providing up-to-date answers beyond its training data.

By running this example, you see:

- How tool methods are defined and annotated
- How the AI decides when to call tools
- How WebSockets enable real-time AI communication
- How to configure different LLM providers

Prerequisites and setup

To run this sample, you’ll need:

- JDK 21 (for example, IBM Semeru Runtime). Run the following command to set the JAVA_HOME environment variable:

    export JAVA_HOME=<your Java 21 home path>

- Git - To clone the sample application repository.
- Access to an LLM provider - Choose from GitHub, Ollama, or Mistral (setup instructions provided below).

To clone the repository and change to the sample directory, run the following commands:

    git clone https://github.com/OpenLiberty/sample-langchain4j.git
    cd sample-langchain4j/tools

AI provider setup

The application supports multiple LLM providers with tool-calling capabilities. Choose one of the following options based on your preference:

GitHub setup

- Sign up and sign in to https://github.com
- Go to your Personal access tokens
- Generate a new token with the models account permission
- Set the GitHub key environment variable:

    export GITHUB_API_KEY=<your GitHub API token>

  or, on Windows:

    set GITHUB_API_KEY=<your GitHub API token>

Ollama setup

Mistral setup

- Sign up and log in to https://console.mistral.ai/home
- Go to Your API keys
- Create a new key
- Set the Mistral API key environment variable:

    export MISTRAL_AI_API_KEY=<your Mistral AI API key>

  or, on Windows:

    set MISTRAL_AI_API_KEY=<your Mistral AI API key>
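As a rough illustration of how an application could pick a provider from these environment variables, consider the following sketch. The selection order and the fallback to Ollama when no key is set are assumptions made for this example, and `ProviderChooser` is a hypothetical class; the sample application may decide differently.

```java
import java.util.Map;

class ProviderChooser {
    // Hypothetical selection order: GitHub first, then Mistral, then Ollama.
    static String choose(Map<String, String> env) {
        if (isSet(env.get("GITHUB_API_KEY"))) {
            return "github";
        }
        if (isSet(env.get("MISTRAL_AI_API_KEY"))) {
            return "mistral";
        }
        // Assumption: a local Ollama installation needs no API key.
        return "ollama";
    }

    private static boolean isSet(String value) {
        return value != null && !value.isBlank();
    }

    public static void main(String[] args) {
        System.out.println("Selected provider: " + choose(System.getenv()));
    }
}
```

Passing the environment in as a map, rather than calling `System.getenv()` inside `choose`, keeps the decision easy to test.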

Start the application

Start the application in dev mode:

    ./mvnw liberty:dev

When you see the following message, the application is ready:

    ************************************************************************
    *    Liberty is running in dev mode.
    *        Automatic generation of features: [ Off ]
    *        h - see the help menu for available actions, type 'h' and press Enter.
    *        q - stop the server and quit dev mode, press Ctrl-C or type 'q' and press Enter.
    *
    *    Liberty server port information:
    *        Liberty server HTTP port: [ 9080 ]
    *        Liberty server HTTPS port: [ 9443 ]
    *        Liberty debug port: [ 7777 ]
    ************************************************************************

To ensure that the application started successfully, you can run the tests by pressing the enter/return key from the command-line session. If the tests pass, you can see output similar to the following example:

    [INFO] -------------------------------------------------------
    [INFO]  T E S T S
    [INFO] -------------------------------------------------------
    [INFO] Running it.io.openliberty.sample.langchain4j.ToolServiceIT
    [INFO] ...
    [INFO] Tests run: 3, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 10.14 s...
    [INFO] Results:
    [INFO]
    [INFO] Tests run: 3, Failures: 0, Errors: 0, Skipped: 0

Access the application

When the application is running, open a browser at http://localhost:9080/toolChat.html and start experimenting with it.

Enter any text to chat with the AI assistant. Here are some suggested messages:

- What are some current problems users have with LangChain4j?
- What are some problems users have with Ollama?

Watch the dev mode console for log messages that show when a tool is being called.

How does the application work?

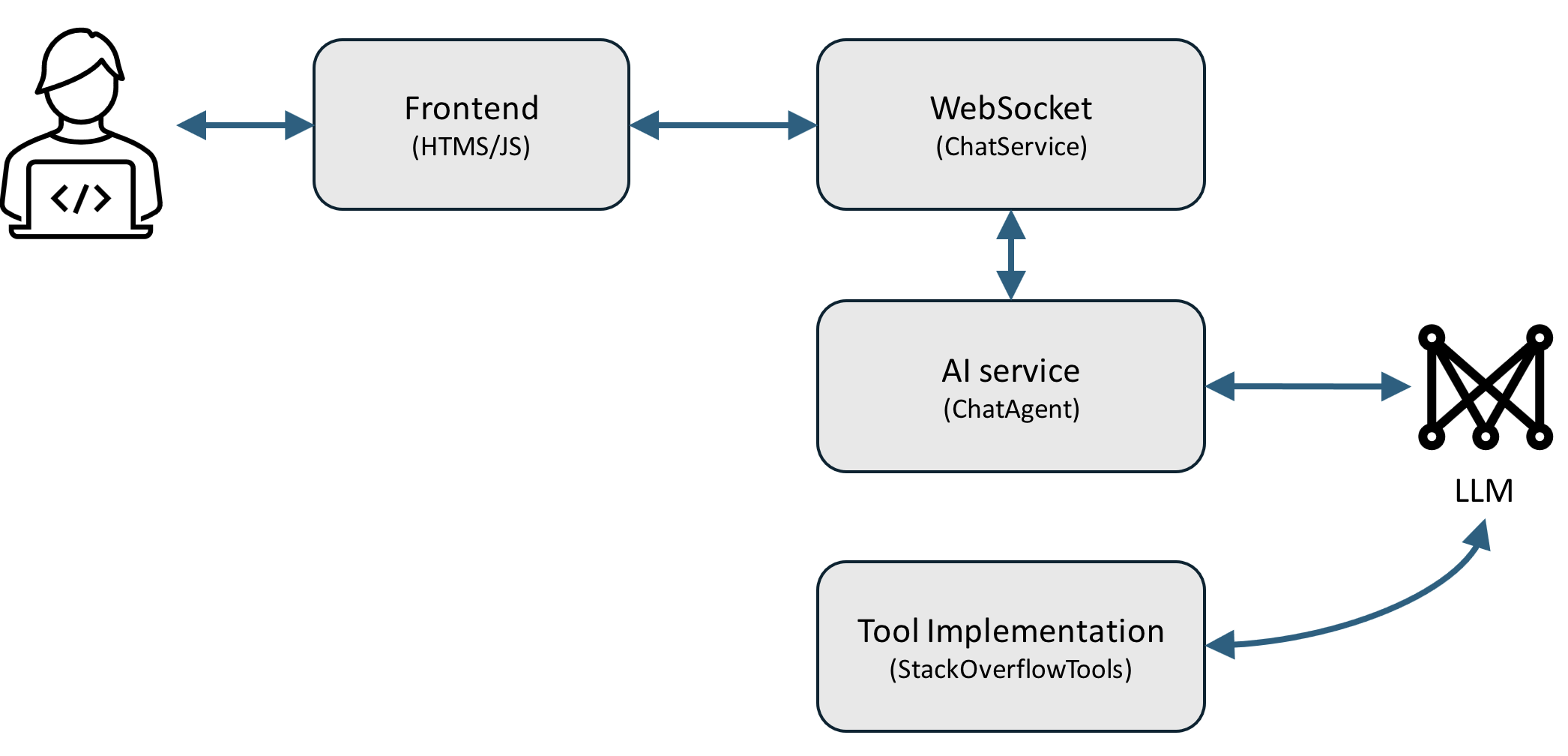

The application architecture consists of four main components that work together to provide an AI assistant with tool-calling capabilities:

- Tool implementation (StackOverflowTools.java) - Java methods annotated to be callable by the LLM
- AI service (ChatAgent.java) - Configures the LLM and exposes it through a Java interface
- WebSocket endpoint (ChatService.java) - Handles real-time communication with clients
- Front end (toolChat.js and toolChat.html) - Provides the user interface

When a user sends a message through the WebSocket, the following sequence occurs:

- The message is received by the ChatService endpoint.
- The message is forwarded to the ChatAgent, which sends it to the configured LLM.
- The LLM analyzes the message and determines if it needs to call a tool.
- If needed, the LLM generates a tool call with appropriate parameters.
- LangChain4j runs the corresponding Java method with those parameters.
- The tool result is returned to the LLM, which incorporates it into its response.
- The final response is sent back to the client through the WebSocket.
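The round trip between the model and the tools can be sketched in plain Java. This is a simplified illustration under stated assumptions, not LangChain4j's implementation: `ToolLoopSketch`, `ModelReply`, and `FakeModel` are hypothetical names, the model is a scripted stand-in, the conversation is a plain string, and the limit of five rounds is arbitrary.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

class ToolLoopSketch {
    // A model reply carries either final text or a request to call a tool.
    record ModelReply(String text, String toolName, String toolArgument) {
        boolean wantsTool() {
            return toolName != null;
        }
    }

    // Hypothetical stand-in for a chat model: conversation in, reply out.
    interface FakeModel extends Function<String, ModelReply> {}

    static String chat(String userMessage,
                       Map<String, Function<String, String>> tools,
                       FakeModel model) {
        String conversation = "USER: " + userMessage;
        for (int round = 0; round < 5; round++) { // arbitrary cap on tool rounds
            ModelReply reply = model.apply(conversation);
            if (!reply.wantsTool()) {
                return reply.text(); // final answer for the client
            }
            Function<String, String> tool = tools.get(reply.toolName());
            // An unknown tool name is reported back to the model instead of failing.
            String result = (tool == null)
                    ? "Error: there is no tool called " + reply.toolName()
                    : tool.apply(reply.toolArgument());
            conversation += "\nTOOL(" + reply.toolName() + "): " + result;
        }
        return "Sorry, I could not complete the request.";
    }

    public static void main(String[] args) {
        Map<String, Function<String, String>> tools = new HashMap<>();
        tools.put("searchStackOverflow", q -> "2 questions found for '" + q + "'");
        // Scripted model: asks for the tool once, then answers.
        FakeModel model = conversation -> conversation.contains("TOOL(")
                ? new ModelReply("Here is a summary of what I found.", null, null)
                : new ModelReply(null, "searchStackOverflow", "LangChain4j");
        System.out.println(chat("What problems do users have with LangChain4j?", tools, model));
    }
}
```

In the real application, LangChain4j performs this loop for you; the sketch only shows why the tool result must be fed back to the model before a final answer is produced.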

This implementation uses the Stack Overflow API to enhance the LLM’s capabilities. When the user asks a technical question that might benefit from current Stack Overflow data, the LLM calls the appropriate search method:

- The search queries the Stack Exchange API to find relevant questions.
- The search retrieves the top answer for each question.
- The search returns the formatted results to the LLM.
- The LLM then synthesizes the returned information into a coherent response.
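The first of these steps, building the search request, can be sketched as follows. The base URL here is an assumption chosen for illustration (the Stack Exchange API's advanced search endpoint), and `SearchUrlSketch` is a hypothetical class; the sample keeps its own URL in a field whose value is not shown in this post. Because user questions often contain spaces and characters such as `&` or `?`, the sketch encodes the query before appending it. Depending on the HTTP client in use, this encoding may already be handled for you.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

class SearchUrlSketch {
    // Assumed endpoint and query parameters, for illustration only.
    static final String SEARCH_BASE =
            "https://api.stackexchange.com/2.3/search/advanced"
            + "?order=desc&sort=relevance&site=stackoverflow";

    // Appends the user's question as an encoded q parameter.
    static String buildSearchUrl(String question) {
        return SEARCH_BASE + "&q=" + URLEncoder.encode(question, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        System.out.println(buildSearchUrl("Liberty & CDI?"));
    }
}
```

With this encoding, the question `Liberty & CDI?` becomes `q=Liberty+%26+CDI%3F`, so the ampersand in the question cannot be mistaken for a parameter separator.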

Tool implementation

The core of tool functionality is implemented through Java methods annotated with LangChain4j’s @Tool and @P annotations:

    @Tool("Search for questions and answers on stackoverflow")
    public ArrayList<String> searchStackOverflow(@P("Question you are searching") String question) throws Exception {
        // Log each invocation so that tool calls are visible in the dev mode console
        logger.info("AI called the searchStackOverflow Tool with question: " + question);
        String targetUrl = stackOverflowMethod + "&q=" + question;
        return questionAndAnswer(targetUrl);
    }

These annotations serve critical purposes:

- The @Tool annotation provides a description that helps the LLM understand when the tool is appropriate to use, what functionality it provides, and what the expected output format is.
- The @P annotation describes each parameter so the LLM can understand what information to provide, format the parameter correctly, and extract relevant details from the user’s query.

The implementation uses standard Jakarta EE HTTP Client APIs to interact with the Stack Exchange API:

    private ArrayList<String> questionAndAnswer(String url) throws Exception {
        ArrayList<String> questionAnswer = new ArrayList<>();
        // For each matching question, fetch its answers and keep the first one
        for (Map<String, Object> data : clientSearch(url)) {
            String topAnswer = clientSearch(String.format(findAnswer, data.get("question_id")))
                    .get(0).get("body").toString();
            questionAnswer.add(
                "Link: " + "https://stackoverflow.com/questions/" + data.get("question_id") +
                " Problem: " + data.get("body") +
                " Answer: " + topAnswer
            );
        }
        return questionAnswer;
    }

The method returns results in a format that is easy for the LLM to parse, contains all necessary context (question, answer, and link), and maintains a consistent structure for reliable processing.

When implementing tools, consider the following good practices:

- Keep tool methods focused on a single responsibility.
- Return structured data that is easy for the LLM to process.
- Include sufficient context in the return value.
- Use descriptive annotations that clearly explain the functions.
- Handle exceptions to prevent LLM confusion.
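One way to follow the last practice is to turn failures into a short, readable message that the LLM can relay, rather than letting a stack trace or an empty result reach it. The following is a minimal sketch of that idea; `SafeToolSketch` and `runSafely` are hypothetical names, and the wording of the error message is only an example.

```java
import java.util.concurrent.Callable;

class SafeToolSketch {
    // Runs the tool body and converts any exception into a plain-text
    // result that the model can understand and pass on to the user.
    static String runSafely(String toolName, Callable<String> body) {
        try {
            return body.call();
        } catch (Exception e) {
            return "Error: the " + toolName + " tool failed (" + e.getMessage()
                    + "). Tell the user that the lookup is currently unavailable.";
        }
    }

    public static void main(String[] args) {
        System.out.println(runSafely("search", () -> "2 questions found"));
        System.out.println(runSafely("search", () -> {
            throw new java.io.IOException("connection timed out");
        }));
    }
}
```

A tool method could delegate its body to such a helper so that every tool reports problems in the same format.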

See the complete implementation in StackOverflowTools.java.

AI service implementation

The ChatAgent class serves as the bridge between your application and the LLM. It handles LLM provider configuration, tool registration, conversation memory management, and error handling for hallucinated tool calls.

The core configuration is done through LangChain4j’s builder pattern:

    public Assistant getAssistant() {
        ChatModel model = modelBuilder.getChatModel();
        assistant = AiServices.builder(Assistant.class)
            .chatModel(model)
            .tools(stackOverflowTools)
            // Reply with an error message instead of failing when the LLM names an unknown tool
            .hallucinatedToolNameStrategy(toolExecutionRequest ->
                ToolExecutionResultMessage.from(toolExecutionRequest,
                    "Error: there is no tool with the following parameters called " +
                    toolExecutionRequest.name()))
            // Keep a separate, bounded message history for each session
            .chatMemoryProvider(
                sessionId -> MessageWindowChatMemory.withMaxMessages(MAX_MESSAGES))
            .build();
        return assistant;
    }

Key configuration elements include the following methods:

- tools(), which registers your tool objects with the AI service so that their descriptions are sent to the LLM.
- hallucinatedToolNameStrategy(), which provides graceful error handling when the LLM attempts to call a non-existent tool (a common problem with LLMs).
- chatMemoryProvider(), which configures conversation history retention; this is essential for maintaining context across multiple messages.
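The idea behind a per-session message window can be shown with a small stand-alone sketch. This is not how MessageWindowChatMemory is implemented; `WindowMemorySketch` is a hypothetical class that only illustrates the two properties that matter here: each session ID has its own history, and the oldest messages are dropped once the limit is reached.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class WindowMemorySketch {
    private final int maxMessages;
    private final Map<String, Deque<String>> histories = new ConcurrentHashMap<>();

    WindowMemorySketch(int maxMessages) {
        this.maxMessages = maxMessages;
    }

    // Appends a message to the session's history and evicts the oldest
    // entries once the window is full.
    void add(String sessionId, String message) {
        Deque<String> history = histories.computeIfAbsent(sessionId, id -> new ArrayDeque<>());
        synchronized (history) {
            history.addLast(message);
            while (history.size() > maxMessages) {
                history.removeFirst();
            }
        }
    }

    // Returns a snapshot of the messages currently kept for the session.
    List<String> messages(String sessionId) {
        Deque<String> history = histories.get(sessionId);
        if (history == null) {
            return List.of();
        }
        synchronized (history) {
            return List.copyOf(history);
        }
    }

    public static void main(String[] args) {
        WindowMemorySketch memory = new WindowMemorySketch(2);
        memory.add("session-1", "first");
        memory.add("session-1", "second");
        memory.add("session-1", "third");
        System.out.println(memory.messages("session-1")); // only the two newest remain
    }
}
```

A bounded window keeps each request to the LLM within a predictable size, at the cost of forgetting the earliest parts of a long conversation.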

The AI service is exposed through a Java interface with LangChain4j annotations:

    interface Assistant {
        @SystemMessage("You are a coding helper, people will go to you for questions around coding. " +
            "You have ONLY four tools. " +
            "ONLY use the tools if NECESSARY. " +
            "ALWAYS follow the tool call parameters exactly and make sure to provide ALL necessary parameters. " +
            "Do NOT add more parameters than needed. " +
            "NEVER give the user unnecessary information. " +
            "NEVER lie or make information up, if you are unsure say so.")
        String chat(@MemoryId String sessionId, @UserMessage String userMessage);
    }

The @SystemMessage annotation provides critical instructions to the LLM about its role and purpose, when and how to use tools, constraints on its behavior, and error handling expectations.

The @MemoryId annotation ensures each user gets a separate conversation history, while @UserMessage marks the parameter that contains the user’s input.

See the complete implementation in ChatAgent.java.

Client and LLM communication with WebSockets

The application uses Jakarta WebSocket to provide real-time communication between the client and the AI service. This approach offers several advantages over traditional REST endpoints:

- Real-time interaction - Immediate responses without polling
- Persistent connection - Reduced overhead for multiple messages
- Session management - Easy tracking of conversation state

The server-side implementation uses standard Jakarta WebSocket annotations:

    @ServerEndpoint(value = "/toolchat", encoders = { ChatMessageEncoder.class })
    public class ChatService {

        @Inject
        ChatAgent agent = null;

        @OnMessage
        public void onMessage(String message, Session session) {
            ...
            // Ask the AI service for an answer, keyed by this connection's session ID
            try {
                answer = agent.chat(sessionId, message);
            } catch (Exception e) {
                ...
            }
            // Send the answer back to the client over the same WebSocket
            try {
                session.getBasicRemote().sendObject(answer);
            } catch (Exception e) {
                ...
            }
        }

        // Other WebSocket lifecycle methods...
    }

Key implementation details:

- Each WebSocket connection gets a unique sessionId that’s passed to the AI service to maintain conversation context.
- Error handling around both the LLM call and the reply ensures users receive appropriate feedback even when issues occur.
- The persistent WebSocket connection accommodates the potentially long processing times of LLM requests, because the server can push the answer to the client whenever it is ready.

The client-side JavaScript establishes the WebSocket connection and handles message exchange:

    const webSocket = new WebSocket(uri);

    webSocket.onmessage = function(event) {
        // Display AI response
        displayMessage(event.data, "ai");
    };

    function sendMessage() {
        var myMessageRow = document.createElement('tr');
        var myMessage = document.getElementById('myMessage').value;
        myMessageRow.innerHTML = '<td><p class=\"my-msg\">' + myMessage + '</p></td>' +
            '<td>' + getTime() + '</td>';
        messagesTableBody.appendChild(myMessageRow);
        messagesTableBody.appendChild(thinkingRow);
        webSocket.send(myMessage);
        document.getElementById('myMessage').value = "";
    }

This bidirectional communication channel creates a responsive chat experience while the underlying tool-calling functionality happens transparently to the user.

See the complete implementations in ChatService.java and toolChat.js.

Conclusion: Building AI-powered Java applications with tool calling

In this tutorial, we’ve explored how to implement LLM tool calling capabilities in MicroProfile and Jakarta EE applications using LangChain4j. By following the implementation patterns demonstrated here, you can extend your own applications with AI capabilities that go beyond simple text generation.

We’ve covered the following key technical concepts:

- Using @Tool and @P annotations to expose Java methods to LLMs
- Setting up LangChain4j with appropriate model settings and tool registrations
- Implementing strategies for handling hallucinated tool calls
- Creating real-time communication channels between users and AI services by using WebSockets
- Maintaining conversation context memory across multiple interactions

The approach demonstrated here offers several advantages for Java developers:

- Separation of Concerns - Your business logic remains in standard Java methods, cleanly separated from AI integration.
- Provider Flexibility - The same code works with multiple LLM providers (GitHub, Ollama, Mistral).
- Enterprise Integration - The LLM calls can be seamlessly integrated into existing Jakarta EE and MicroProfile applications.
- Extensibility - It’s easy to add new tools as your application’s requirements evolve.

Ways in which you could extend this tool calling example for your own applications include:

- Creating tools that interact with your application’s databases.
- Implementing tools that trigger business processes or workflows.
- Adding tools that access internal knowledge bases or documentation.
- Developing domain-specific tools for your particular industry.

The combination of modern LLMs with Jakarta EE’s enterprise capabilities opens up powerful new possibilities for building intelligent applications that can take actions, access real-time data, and provide more valuable responses to users.

Where to next?

Check out the Open Liberty guides for more information and interactive tutorials that walk you through other Jakarta EE and MicroProfile APIs with Open Liberty.